[Korean] Multiagent Bidirectional-Coordinated Nets for Learning to Play StarCraft Combat Games

The document summarizes a presentation on a paper about using multiagent bidirectional-coordinated networks (BiCNet) to develop AI agents that can learn to play combat games in StarCraft. The paper introduces BiCNet, which uses bidirectional RNNs to allow agents to communicate and coordinate their actions. Experiments show BiCNet agents outperform independent and other cooperative agents in different combat scenarios in StarCraft, developing strategies like focus firing and coordinated attacks. Visualizations of agent coordination and additional areas for investigation are also discussed.

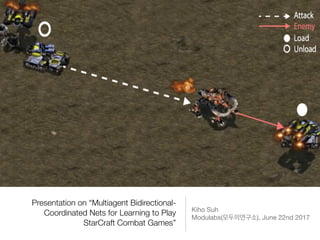

- 1. Presentation on “Multiagent Bidirectional-Coordinated Nets for Learning to Play StarCraft Combat Games” Kiho Suh, Modulabs, June 22nd 2017

- 2. About Paper • Published on March 29th 2017 (v1) • Updated on June 20th 2017 (v3) • Alibaba, University College London • https://arxiv.org/pdf/1703.10069.pdf

- 3. Motivation • Single-agent AI (Atari, Baduk, Texas Hold’em) • Artificial General Intelligence? • AI agent • real-time strategy (RTS) game “StarCraft” • play, “StarCraft” • Parameter space, joint learning approach

- 4. ? agent

- 5. Communication; communication protocol: multi-agent bidirectionally-coordinated network (BiCNet) with a vectorized extension of the actor-critic formulation

- 6. ? • agent BiCNet . • evaluation-decision-making process . • Parameter dynamic grouping . • AI agent . • label data BiCNet agent .

- 8. Related works • Jakob Foerster, Yannis M Assael, Nando de Freitas, and Shimon Whiteson. Learning to communicate with deep multi-agent reinforcement learning. NIPS 2016. • Sainbayar Sukhbaatar, Rob Fergus, et al. Learning multiagent communication with backpropagation. NIPS 2016.

- 9. Differentiable Inter-Agent Learning (Jakob Foerster et al. 2016) • agent agent Q RNN time-step transfer . • times-step agent transfer . • Agent agent , agent observation action .

- 10. Differentiable Inter-Agent Learning & Reinforced Inter-Agent Learning (Jakob Foerster et al. 2016) • Non-stationary environments • StarCraft real-time strategy (RTS)

- 11. CommNet (Sainbayar Sukhbaatar et al. 2016) • Multi-agent • Passes the averaged message over the agent modules between layers • Fully symmetric, so it lacks the ability to handle heterogeneous agent types
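The "averaged message" step in CommNet can be illustrated with a small sketch. It assumes each agent holds a hidden vector and receives the mean of the other agents' hidden vectors; the function name `mean_messages` and the toy numbers are hypothetical, and the learned layers around this step are left out.

```python
from typing import List

Vector = List[float]


def mean_messages(hidden: List[Vector]) -> List[Vector]:
    """For each agent, average the hidden states of all *other* agents."""
    n = len(hidden)
    if n < 2:
        # A lone agent has nobody to listen to, so its message is all zeros.
        return [[0.0] * len(h) for h in hidden]
    dim = len(hidden[0])
    totals = [sum(h[d] for h in hidden) for d in range(dim)]
    # Subtract the agent's own state from the total, then divide by n - 1.
    return [[(totals[d] - h[d]) / (n - 1) for d in range(dim)] for h in hidden]


# Toy hidden states for three agents (made-up values).
hidden_states = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0]]
messages = mean_messages(hidden_states)
print(messages)
```

Every agent is processed by the same averaging rule, so the agents are interchangeable. This is the "fully symmetric" property the slide names as a limitation for heterogeneous unit types.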

- 12. BiCNet

- 13. Stochastic Game of N agents and M opponents • $S$: agent state space • $A_i$: action space of controlled agent $i$, $i \in [1, N]$ • $B_j$: action space of enemy $j$, $j \in [1, M]$ • $T: S \times A^N \times B^M \to S$: deterministic transition function of the environment • $R_i: S \times A^N \times B^M \to \mathbb{R}$: reward function of agent/enemy $i$, $i \in [1, N+M]$ * agent( , ) action space .

- 14. Global Reward • Continuous action space to reduce the redundancy in modeling the large discrete action space • Reward shaping agent . • Global reward: agent reward .

- 15. Definition of Reward Function • Eq. (1) controlled agent . enemy global reward . controlled agent enemy 0 . zero-sum game! • . reward . (controlled agents) reduced health level for agent j (enemies)
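A zero-sum global reward built from "reduced health levels" can be sketched as below. The sketch assumes the reward for the controlled side is the mean health lost by the enemies minus the mean health lost by the controlled agents in one step, with the enemy side receiving the negative of it. The function names and hit-point numbers are hypothetical, and the exact form of Eq. (1) should be checked against the paper.

```python
from typing import Sequence


def mean_health_drop(prev_hp: Sequence[float], curr_hp: Sequence[float]) -> float:
    """Average health lost per unit over one step (healing is ignored)."""
    drops = [max(p - c, 0.0) for p, c in zip(prev_hp, curr_hp)]
    return sum(drops) / len(drops) if drops else 0.0


def global_reward(ally_prev, ally_curr, enemy_prev, enemy_curr) -> float:
    """Assumed form: damage dealt to enemies minus damage taken by allies."""
    return mean_health_drop(enemy_prev, enemy_curr) - mean_health_drop(ally_prev, ally_curr)


# Three controlled Marines against one enemy unit (made-up hit points).
r_ours = global_reward(
    ally_prev=[40.0, 40.0, 40.0], ally_curr=[40.0, 34.0, 40.0],
    enemy_prev=[120.0], enemy_curr=[108.0],
)
r_enemy = -r_ours  # zero-sum: the two sides' rewards add up to 0
print(r_ours, r_enemy)
```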

- 16. Minimax Game • Controlled agent expected sum of discounted rewards policy . • Enemy joint policy expected sum . optimal action-state value function

- 17. Sampled historical state-action pairs (s, b) of the enemies • Minimax Q-learning . Eq. (2) Q-function modelling . • fictitious play enemies policy $b_\phi$ . - AI agent fictitious play . Controlled agents( ) enemies player . Eq.(2) Q-function . - , supervised learning deterministic policy $b_\phi$ . • Policy network sampled historical state-action pairs (s, b) .

- 18. Simpler MDP problem Enemies policy , Eq. (2) Stochastic Game MDP .

- 19. Eq. (1) • Eq. (1) global reward Eq. (1) zero-sum game local collaboration reward function team collaboration . • agent collaboration . • Eq. (1) agent local reward agent .

- 20. Extension of formulation of Eq. (1) • agent i top-K(i) • k reward. • agent top-K . • Eq (1) .

- 21. Bellman equation for agent i • N numbers, i ∈ {1, ..., N}

- 22. Objective as an expectation • action space model-free policy iteration . • Qi gradient policy vectorized version of deterministic policy gradient (DPG) .

- 23. Final Equation (Actor) • agents rewards gradient agent action backpropagate gradient parameter backpropagate .

- 24. Final Equation (Critic) • Off-policy deterministic actor-critic • critic: off-policy action-value function .
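The equations on the actor and critic slides did not survive extraction. As a reading aid only, the block below writes out the generic form of a vectorized deterministic actor-critic update. All symbols are assumptions, not the slides' own notation: $a_\theta$ is the joint deterministic policy, $Q_i^{\xi}$ is the critic's value for agent $i$, $\gamma$ is the discount factor, and the bootstrapped target is treated as a constant when differentiating. Check the exact equations against the paper.

```latex
% Generic sketch in assumed notation; not copied from the slides.
\begin{align}
  % Actor: every agent's value Q_i sends a gradient through every action a_j.
  \nabla_\theta J(\theta)
    &\approx \mathbb{E}_{s}\Big[\sum_{i=1}^{N}\sum_{j=1}^{N}
       \nabla_\theta a_{j,\theta}(s)\,
       \nabla_{a_j} Q_i^{\xi}\big(s, a_\theta(s)\big)\Big] \\
  % Critic: per-agent temporal-difference error with an off-policy target.
  \delta_i
    &= r_i(s, a) + \gamma\, Q_i^{\xi}\big(s', a_\theta(s')\big) - Q_i^{\xi}(s, a) \\
  % Semi-gradient of L = (1/2) E[ sum_i delta_i^2 ].
  \nabla_\xi L(\xi)
    &= -\,\mathbb{E}_{s}\Big[\sum_{i=1}^{N} \delta_i\, \nabla_\xi Q_i^{\xi}(s, a)\Big]
\end{align}
```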

- 25. Actor-Critic networks • Ready to use SGD to compute the updates for both the actor and critic networks • backprop .

- 27. BiCNet • Bi-directional RNN actor-critic .

- 28. Design of the two networks • Parameter agent agent . agent . • agent training test agent . • bi-directional RNN agent . • Full dependency among agents because the gradients from all the actions in Eq. (9) are efficiently propagated through the entire networks • Not fully symmetric, and maintaining certain social conventions and roles by fixing the order of the agents that join the RNN. Solving any possible tie between multiple optimal joint actions
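A bi-directional RNN unrolled over agents, rather than over time, can be illustrated with a toy sketch. This is not the paper's network: observations and actions are single numbers, the weights are fixed hypothetical constants, and the names `rnn_pass` and `bidirectional_actor` are made up for illustration.

```python
import math
from typing import List


def rnn_pass(inputs: List[float], w_in: float, w_rec: float) -> List[float]:
    """Run a scalar tanh RNN over the agent sequence; return every hidden state."""
    hidden, states = 0.0, []
    for x in inputs:
        # The same two weights are reused for every agent (parameter sharing).
        hidden = math.tanh(w_in * x + w_rec * hidden)
        states.append(hidden)
    return states


def bidirectional_actor(observations: List[float]) -> List[float]:
    """Map one observation per agent to one action per agent."""
    forward = rnn_pass(observations, w_in=0.8, w_rec=0.5)
    # The backward pass reads the agents in reverse order, then is flipped back.
    backward = rnn_pass(observations[::-1], w_in=0.6, w_rec=-0.4)[::-1]
    return [math.tanh(f + b) for f, b in zip(forward, backward)]


team = [0.2, -0.4, 0.9]
actions = bidirectional_actor(team)
larger_team_actions = bidirectional_actor(team + [0.1, 0.3])
# Change only the last agent's observation and watch the first agent's action.
perturbed = bidirectional_actor([0.2, -0.4, -0.9])
print(actions[0], perturbed[0])
```

The same weights serve a team of three or five, because they are shared across positions in the sequence. The first agent's action shifts when only the last agent's observation changes, which is the dependency among agents that the slide describes.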

- 29. Experiments • BiCNet agents vs. the built-in AI

- 30. Experiments • Easy combats: {3 Marines vs. 1 Super Zergling}, {3 Wraiths vs. 3 Mutalisks} • Difficult combats: {5 Marines vs. 5 Marines}, {15 Marines vs. 16 Marines}, {20 Marines vs. 30 Zerglings}, {10 Marines vs. 13 Zerglings}, {15 Wraiths vs. 17 Wraiths} • Heterogeneous combats: {2 Dropships and 2 Tanks vs. 1 Ultralisk} • Unit images (Marine, Zergling, Wraith, Mutalisk, Dropship, Ultralisk, Siege Tank) are from http://starcraft.wikia.com/wiki/

- 31. Baselines • Independent controller (IND) • Fully-connected (FC): agents fully connected • CommNet: multi-agent • GreedyMDP with Episodic Zero-Order Optimization (GMEZO): conducts collaboration through a greedy update over MDP agents, and adds episodic noise in the parameter space for exploration

- 32. Action space for each individual agent • 3-dimensional real vector • 1st dimension: ranges from -1 to 1 - greater than or equal to 0: the agent attacks - otherwise: the agent moves • 2nd and 3rd dimensions: degree and distance, which together give the destination, relative to the agent's current location, that it should move to or attack
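Decoding this 3-dimensional action into a unit command can be sketched as below. The slide does not give the ranges of the degree and distance components, so the sketch assumes the degree lies in [-1, 1] and maps to [-180°, 180°], and the distance lies in [0, 1] and is scaled by a hypothetical `max_distance`. The `Command` type and `decode_action` name are also made up for illustration.

```python
import math
from typing import NamedTuple, Tuple


class Command(NamedTuple):
    kind: str        # "attack" or "move"
    target_x: float
    target_y: float


def decode_action(action: Tuple[float, float, float],
                  position: Tuple[float, float],
                  max_distance: float = 8.0) -> Command:
    """Turn (switch, degree, distance) into an attack/move command."""
    switch, degree, distance = action
    # 1st dimension: >= 0 means attack, otherwise move (as on the slide).
    kind = "attack" if switch >= 0.0 else "move"
    # Assumed scaling: degree in [-1, 1] -> radians, distance in [0, 1] -> map units.
    angle = max(-1.0, min(1.0, degree)) * math.pi
    reach = max(0.0, min(1.0, distance)) * max_distance
    x, y = position
    return Command(kind, x + reach * math.cos(angle), y + reach * math.sin(angle))


attack = decode_action((0.0, 0.0, 0.5), position=(1.0, 2.0))
retreat = decode_action((-0.3, 0.5, 1.0), position=(0.0, 0.0))
print(attack, retreat)
```

One continuous vector covers both "attack" and "move", so the policy does not need a separate discrete output for every possible target.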

- 33. Training • Nadam optimizer • learning rate = 0.002 • 800 episodes (more than 700k steps)

- 34. Simple Experiment • Tested on 100 independent games • Skip frame: how many frames to skip between controlling the agents' actions • The winning rate is highest when batch_size is 32 (highest mean Q-value after 600k training steps) and skip_frame is 2 (highest mean Q-value between 300k and 600k steps)
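The skip-frame setting can be illustrated with a toy control loop. The sketch assumes that `skip_frame = k` means the policy is queried on one frame and its action is then held for the next `k` frames. The slide does not spell out this convention, and the environment and policy here are stand-ins rather than a StarCraft interface.

```python
from typing import Callable, Tuple


def run_with_frame_skip(policy: Callable[[int], int],
                        env_step: Callable[[int, int], int],
                        state: int,
                        total_frames: int,
                        skip_frame: int) -> Tuple[int, int]:
    """Step the environment every frame, but query the policy only every skip_frame + 1 frames."""
    decisions = 0
    action = 0
    for frame in range(total_frames):
        if frame % (skip_frame + 1) == 0:
            action = policy(state)   # fresh decision
            decisions += 1
        state = env_step(state, action)  # held action on skipped frames
    return state, decisions


# Toy stand-ins: the state is a counter and the action is added to it each frame.
final_state, decisions = run_with_frame_skip(
    policy=lambda s: 1,
    env_step=lambda s, a: s + a,
    state=0, total_frames=12, skip_frame=2,
)
print(final_state, decisions)
```

A larger skip means fewer policy queries per game but coarser control, which is why the setting is tuned alongside the batch size.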

- 35. Simple Experiment • Letting 4~6 agents work together as a group can efficiently control individual agents while maximizing damage output. • Fig. 3: a group size of 4~5 achieves the best performance. • Fig. 4: convergence speed, shown by plotting the winning rate against the number of training episodes.

- 36. Performance Comparison • BiCNet is trained over 100k steps • Performance is measured as the average winning rate over 100 test games • When the number of agents goes beyond 10, the performance margin between BiCNet and the second-best method starts to increase

- 37. Performance Comparison • In “5M vs. 5M”, the key factor to win is to “focus fire” on the weak. • As BiCNet has a built-in design for dynamic grouping, a small number of agents (such as “5M vs. 5M”) does not suffice to show the advantages of BiCNet on large-scale collaborations. • For “5M vs. 5M”, BiCNet only needs 10 combats before learning the idea of “focus fire,” achieving an 85% win rate, whereas CommNet needs at least 50 episodes with a much lower winning rate

- 38. Visualization • “3 Marines vs. 1 Super Zergling” when the coordinated cover attack has been learned. • Collected values in the last hidden layer of the well-trained critic network over 10k steps. • t-SNE

- 39. Strategies to Experiment • Move without collision • task. . • Hit and run • task. . • Cover attack • task. . • Focus fire without overkill • task. . • Collaboration between heterogeneous agents • task. .

- 41. Coordinated moves without collision (3 Marines (ours) vs. 1 Super Zergling) • Early in training, the agents move in a rather uncoordinated way; in particular, when two agents are close to each other, one may unintentionally block the other’s path. • After 40k steps in around 50 episodes, the number of collisions drops dramatically.

- 42. Winning rate against difficult settings

- 43. Hit and Run tactics (3 Marines (ours) vs. 1 Zealot) • Move agents away when under attack, and fight back when they feel safe again.

- 44. Coordinated Cover Attack (4 Dragoons (ours) vs. 2 Ultralisks) • Let one agent draw fire or attention from the enemies. • In the meantime, other agents can take advantage of the time or distance gap to inflict more damage.

- 45. Coordinated Cover Attack (3 Marines (ours) vs. 1 Zergling)

- 46. Focus fire without overkill (15 Marines (ours) vs. 16 Marines) • How to efficiently allocate the attacking resources becomes important. • The grouping design in the policy network serves as the key factor for BiCNet to learn “focus fire without overkill.” • Even as the number of our units decreases, each group can be dynamically reassigned so that 3~5 units focus on attacking the same enemy.

- 47. Collaborations between heterogeneous agents (2 Dropships and 2 Tanks vs. 1 Ultralisk) • StarCraft has a wide variety of unit types • Can be easily implemented in BiCNet

- 48. Further to Investigate after this paper • Strong correlation between the specified reward and the learned policies • How the policies are communicated over the networks among agents • Whether a specific language may have emerged • Nash equilibrium when both sides are played by deep multi-agent models

- 49. – Youtube “ ” “ xx xx xx xx ” “ 20 …” !